Artificial Intelligence (AI)

Artificial Intelligence (AI):

AI refers to systems that can perform complex tasks normally associated with humans, such as visual perception, language interpretation and speech recognition. An Arduino board can also run simple AI code, and a neural network is one example of this. The example here is a feed-forward network trained with backpropagation, one of the most commonly used network designs.

Artificial Neural Net:

An artificial neural network is a mathematical computing model inspired by how the brain responds to sensory inputs. A brain contains a great many neurons, each of which responds in its own way and is connected to other neurons. An artificial neural network imitates this with simple interconnected nodes. This article uses the Arduino neural network example. The code carries out the mathematics, and the network learns how to respond to inputs from example sets of inputs and outputs.
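To make the idea of a single node concrete, here is a minimal sketch in standard C++ for a desktop compiler. The function names `sigmoid` and `neuronActivation` and the weight layout (input weights followed by one bias weight) are assumptions made for this illustration; they are not names from the Arduino code, although the activation formula is the same one it uses.

```cpp
#include <cmath>
#include <cstddef>

// Logistic (sigmoid) activation: squashes any value into the range 0..1.
float sigmoid(float x) {
    return 1.0f / (1.0f + std::exp(-x));
}

// Activation of one node: bias plus the weighted sum of its inputs,
// passed through the sigmoid. `weights` holds n input weights followed
// by one bias weight (hypothetical layout for this sketch).
float neuronActivation(const float* inputs, const float* weights, std::size_t n) {
    float accum = weights[n];  // start from the bias weight
    for (std::size_t j = 0; j < n; ++j) {
        accum += inputs[j] * weights[j];
    }
    return sigmoid(accum);
}
```

A node whose weighted sum is exactly zero produces 0.5, large positive sums approach 1 and large negative sums approach 0.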

Here is how the code works:

First, set up the arrays and fill the weights with random values.

Then loop over every item in the training data.

On each cycle, shuffle the order of the training data so that the network is less likely to settle into a local minimum.

Next, feed each pattern through the network, calculating the activations of the hidden layer nodes, the output layer nodes and the errors, then backpropagate the errors to the hidden layer.

After that, update the associated weights, compare the error to a threshold and decide whether to run another cycle or whether training is complete.

Lastly, send the training data to the Serial Monitor every thousand cycles.
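The shuffling step can be sketched on its own in standard C++. This is only an illustration: `shuffleIndices` is a hypothetical helper name, and `std::rand` stands in for the Arduino `random()` call used in the actual code. The swap logic mirrors the loop in the sketch, which exchanges each slot with a randomly chosen one.

```cpp
#include <cstdlib>

// Shuffle an index array in place by swapping every slot with a randomly
// chosen slot. The array stays a permutation of its original contents, so
// every training pattern is still visited exactly once per cycle.
void shuffleIndices(int* index, int count) {
    for (int p = 0; p < count; ++p) {
        int q = std::rand() % count;  // stand-in for Arduino's random(count)
        int r = index[p];
        index[p] = index[q];
        index[q] = r;
    }
}
```

Training then walks through the patterns in the order given by the shuffled index array rather than in their stored order.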

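The input and output training data:

Each row of Input holds the seven segment states of an LED display showing a digit from 0 to 9, and the matching row of Target holds that digit as a 4-bit binary number. Both arrays are defined in the configuration section of the code.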

The code:

//Author: Ralph Heymsfeld

//28/06/2018

#include <math.h>

/******************************************************************
 * Network Configuration - customized per network
 ******************************************************************/

const int PatternCount = 10;
const int InputNodes = 7;
const int HiddenNodes = 8;
const int OutputNodes = 4;
const float LearningRate = 0.3;
const float Momentum = 0.9;
const float InitialWeightMax = 0.5;
const float Success = 0.0004;

const byte Input[PatternCount][InputNodes] = {
  { 1, 1, 1, 1, 1, 1, 0 },  // 0
  { 0, 1, 1, 0, 0, 0, 0 },  // 1
  { 1, 1, 0, 1, 1, 0, 1 },  // 2
  { 1, 1, 1, 1, 0, 0, 1 },  // 3
  { 0, 1, 1, 0, 0, 1, 1 },  // 4
  { 1, 0, 1, 1, 0, 1, 1 },  // 5
  { 0, 0, 1, 1, 1, 1, 1 },  // 6
  { 1, 1, 1, 0, 0, 0, 0 },  // 7
  { 1, 1, 1, 1, 1, 1, 1 },  // 8
  { 1, 1, 1, 0, 0, 1, 1 }   // 9
};

const byte Target[PatternCount][OutputNodes] = {
  { 0, 0, 0, 0 },
  { 0, 0, 0, 1 },
  { 0, 0, 1, 0 },
  { 0, 0, 1, 1 },
  { 0, 1, 0, 0 },
  { 0, 1, 0, 1 },
  { 0, 1, 1, 0 },
  { 0, 1, 1, 1 },
  { 1, 0, 0, 0 },
  { 1, 0, 0, 1 }
};

/******************************************************************
 * End Network Configuration
 ******************************************************************/

int i, j, p, q, r;
int ReportEvery1000;
int RandomizedIndex[PatternCount];
long TrainingCycle;
float Rando;
float Error;
float Accum;

float Hidden[HiddenNodes];
float Output[OutputNodes];
float HiddenWeights[InputNodes+1][HiddenNodes];
float OutputWeights[HiddenNodes+1][OutputNodes];
float HiddenDelta[HiddenNodes];
float OutputDelta[OutputNodes];
float ChangeHiddenWeights[InputNodes+1][HiddenNodes];
float ChangeOutputWeights[HiddenNodes+1][OutputNodes];

void setup(){
  Serial.begin(9600);
  randomSeed(analogRead(3));
  ReportEvery1000 = 1;
  for( p = 0 ; p < PatternCount ; p++ ) {
    RandomizedIndex[p] = p ;
  }
}

void loop (){

  /******************************************************************
   * Initialize HiddenWeights and ChangeHiddenWeights
   ******************************************************************/

  for( i = 0 ; i < HiddenNodes ; i++ ) {
    for( j = 0 ; j <= InputNodes ; j++ ) {
      ChangeHiddenWeights[j][i] = 0.0 ;
      Rando = float(random(100))/100;
      HiddenWeights[j][i] = 2.0 * ( Rando - 0.5 ) * InitialWeightMax ;
    }
  }

  /******************************************************************
   * Initialize OutputWeights and ChangeOutputWeights
   ******************************************************************/

  for( i = 0 ; i < OutputNodes ; i ++ ) {
    for( j = 0 ; j <= HiddenNodes ; j++ ) {
      ChangeOutputWeights[j][i] = 0.0 ;
      Rando = float(random(100))/100;
      OutputWeights[j][i] = 2.0 * ( Rando - 0.5 ) * InitialWeightMax ;
    }
  }

  Serial.println("Initial/Untrained Outputs: ");
  toTerminal();

  /******************************************************************
   * Begin training
   ******************************************************************/

  for( TrainingCycle = 1 ; TrainingCycle < 2147483647 ; TrainingCycle++) {

    /******************************************************************
     * Randomize order of training patterns
     ******************************************************************/

    for( p = 0 ; p < PatternCount ; p++) {
      q = random(PatternCount);
      r = RandomizedIndex[p] ;
      RandomizedIndex[p] = RandomizedIndex[q] ;
      RandomizedIndex[q] = r ;
    }
    Error = 0.0 ;

    /******************************************************************
     * Cycle through each training pattern in the randomized order
     ******************************************************************/

    for( q = 0 ; q < PatternCount ; q++ ) {
      p = RandomizedIndex[q];

      /******************************************************************
       * Compute hidden layer activations
       ******************************************************************/

      for( i = 0 ; i < HiddenNodes ; i++ ) {
        Accum = HiddenWeights[InputNodes][i] ;
        for( j = 0 ; j < InputNodes ; j++ ) {
          Accum += Input[p][j] * HiddenWeights[j][i] ;
        }
        Hidden[i] = 1.0/(1.0 + exp(-Accum)) ;
      }

      /******************************************************************
       * Compute output layer activations and calculate errors
       ******************************************************************/

      for( i = 0 ; i < OutputNodes ; i++ ) {
        Accum = OutputWeights[HiddenNodes][i] ;
        for( j = 0 ; j < HiddenNodes ; j++ ) {
          Accum += Hidden[j] * OutputWeights[j][i] ;
        }
        Output[i] = 1.0/(1.0 + exp(-Accum)) ;
        OutputDelta[i] = (Target[p][i] - Output[i]) * Output[i] * (1.0 - Output[i]) ;
        Error += 0.5 * (Target[p][i] - Output[i]) * (Target[p][i] - Output[i]) ;
      }

      /******************************************************************
       * Backpropagate errors to hidden layer
       ******************************************************************/

      for( i = 0 ; i < HiddenNodes ; i++ ) {
        Accum = 0.0 ;
        for( j = 0 ; j < OutputNodes ; j++ ) {
          Accum += OutputWeights[i][j] * OutputDelta[j] ;
        }
        HiddenDelta[i] = Accum * Hidden[i] * (1.0 - Hidden[i]) ;
      }

      /******************************************************************
       * Update Inner-->Hidden Weights
       ******************************************************************/

      for( i = 0 ; i < HiddenNodes ; i++ ) {
        ChangeHiddenWeights[InputNodes][i] = LearningRate * HiddenDelta[i] + Momentum * ChangeHiddenWeights[InputNodes][i] ;
        HiddenWeights[InputNodes][i] += ChangeHiddenWeights[InputNodes][i] ;
        for( j = 0 ; j < InputNodes ; j++ ) {
          ChangeHiddenWeights[j][i] = LearningRate * Input[p][j] * HiddenDelta[i] + Momentum * ChangeHiddenWeights[j][i];
          HiddenWeights[j][i] += ChangeHiddenWeights[j][i] ;
        }
      }

      /******************************************************************
       * Update Hidden-->Output Weights
       ******************************************************************/

      for( i = 0 ; i < OutputNodes ; i ++ ) {
        ChangeOutputWeights[HiddenNodes][i] = LearningRate * OutputDelta[i] + Momentum * ChangeOutputWeights[HiddenNodes][i] ;
        OutputWeights[HiddenNodes][i] += ChangeOutputWeights[HiddenNodes][i] ;
        for( j = 0 ; j < HiddenNodes ; j++ ) {
          ChangeOutputWeights[j][i] = LearningRate * Hidden[j] * OutputDelta[i] + Momentum * ChangeOutputWeights[j][i] ;
          OutputWeights[j][i] += ChangeOutputWeights[j][i] ;
        }
      }
    }

    /******************************************************************
     * Every 1000 cycles send data to terminal for display
     ******************************************************************/

    ReportEvery1000 = ReportEvery1000 - 1;
    if (ReportEvery1000 == 0)
    {
      Serial.println();
      Serial.println();
      Serial.print ("TrainingCycle: ");
      Serial.print (TrainingCycle);
      Serial.print (" Error = ");
      Serial.println (Error, 5);

      toTerminal();

      if (TrainingCycle==1)
      {
        ReportEvery1000 = 999;
      }
      else
      {
        ReportEvery1000 = 1000;
      }
    }

    /******************************************************************
     * If error rate is less than pre-determined threshold then end
     ******************************************************************/

    if( Error < Success ) break ;
  }

  Serial.println ();
  Serial.println();
  Serial.print ("TrainingCycle: ");
  Serial.print (TrainingCycle);
  Serial.print (" Error = ");
  Serial.println (Error, 5);

  toTerminal();

  Serial.println ();
  Serial.println ();
  Serial.println ("Training Set Solved! ");
  Serial.println ("--------");
  Serial.println ();
  Serial.println ();
  ReportEvery1000 = 1;
}

void toTerminal()
{
  for( p = 0 ; p < PatternCount ; p++ ) {
    Serial.println();
    Serial.print (" Training Pattern: ");
    Serial.println (p);
    Serial.print (" Input ");
    for( i = 0 ; i < InputNodes ; i++ ) {
      Serial.print (Input[p][i], DEC);
      Serial.print (" ");
    }
    Serial.print (" Target ");
    for( i = 0 ; i < OutputNodes ; i++ ) {
      Serial.print (Target[p][i], DEC);
      Serial.print (" ");
    }

    /******************************************************************
     * Compute hidden layer activations
     ******************************************************************/

    for( i = 0 ; i < HiddenNodes ; i++ ) {
      Accum = HiddenWeights[InputNodes][i] ;
      for( j = 0 ; j < InputNodes ; j++ ) {
        Accum += Input[p][j] * HiddenWeights[j][i] ;
      }
      Hidden[i] = 1.0/(1.0 + exp(-Accum)) ;
    }

    /******************************************************************
     * Compute output layer activations and calculate errors
     ******************************************************************/

    for( i = 0 ; i < OutputNodes ; i++ ) {
      Accum = OutputWeights[HiddenNodes][i] ;
      for( j = 0 ; j < HiddenNodes ; j++ ) {
        Accum += Hidden[j] * OutputWeights[j][i] ;
      }
      Output[i] = 1.0/(1.0 + exp(-Accum)) ;
    }
    Serial.print (" Output ");
    for( i = 0 ; i < OutputNodes ; i++ ) {
      Serial.print (Output[i], 5);
      Serial.print (" ");
    }
  }
}
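The arithmetic in the training loop can be summarized as the set of update rules below. The symbols are notation introduced here for readability and do not appear in the code: x is an input, h a hidden activation, o an output, t a target, b a bias weight (stored in the extra row of each weight array), η is LearningRate and α is Momentum.

```latex
\begin{aligned}
\sigma(a) &= \frac{1}{1 + e^{-a}} \\
h_i &= \sigma\Big(b^{H}_{i} + \sum_{j} x_j\, w^{H}_{ji}\Big) \\
o_k &= \sigma\Big(b^{O}_{k} + \sum_{i} h_i\, w^{O}_{ik}\Big) \\
\delta^{O}_{k} &= (t_k - o_k)\, o_k\, (1 - o_k) \\
\delta^{H}_{i} &= \Big(\sum_{k} w^{O}_{ik}\, \delta^{O}_{k}\Big)\, h_i\, (1 - h_i) \\
\Delta w^{O}_{ik} &\leftarrow \eta\, h_i\, \delta^{O}_{k} + \alpha\, \Delta w^{O}_{ik},
  \qquad w^{O}_{ik} \leftarrow w^{O}_{ik} + \Delta w^{O}_{ik} \\
\Delta w^{H}_{ji} &\leftarrow \eta\, x_j\, \delta^{H}_{i} + \alpha\, \Delta w^{H}_{ji},
  \qquad w^{H}_{ji} \leftarrow w^{H}_{ji} + \Delta w^{H}_{ji} \\
E &= \sum_{p} \sum_{k} \tfrac{1}{2}\, (t_k - o_k)^2
\end{aligned}
```

The bias weights follow the same two update rules with the input (or hidden activation) fixed at 1, and training stops once E, summed over all patterns in a cycle, falls below Success.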

Outcome:

TrainingCycle: 5843 Error = 0.00040

Training Pattern: 0
Input 1 1 1 1 1 1 0 Target 0 0 0 0 Output 0.00571 0.00034 0.00472 0.00543

Training Pattern: 1
Input 0 1 1 0 0 0 0 Target 0 0 0 1 Output 0.00350 0.00591 0.00454 0.99561

Training Pattern: 2
Input 1 1 0 1 1 0 1 Target 0 0 1 0 Output 0.00227 0.00081 0.99999 0.00107

Training Pattern: 3
Input 1 1 1 1 0 0 1 Target 0 0 1 1 Output 0.00376 0.00637 0.99623 0.99826

Training Pattern: 4
Input 0 1 1 0 0 1 1 Target 0 1 0 0 Output 0.00628 0.99480 0.00121 0.00549

Training Pattern: 5
Input 1 0 1 1 0 1 1 Target 0 1 0 1 Output 0.00214 0.99718 0.00622 0.99632

Training Pattern: 6
Input 0 0 1 1 1 1 1 Target 0 1 1 0 Output 0.00007 0.99716 0.99433 0.00002

Training Pattern: 7
Input 1 1 1 0 0 0 0 Target 0 1 1 1 Output 0.00001 0.99162 0.99312 1.00000

Training Pattern: 8
Input 1 1 1 1 1 1 1 Target 1 0 0 0 Output 0.99144 0.00001 0.00194 0.00016

Training Pattern: 9
Input 1 1 1 0 0 1 1 Target 1 0 0 1 Output 0.99294 0.00768 0.00229 0.99390
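The trained outputs are close to 0 or 1 but never exactly equal to them, so turning them back into a digit needs a rounding step. The sketch below, in standard C++, shows one way this could be done. The helper name `decodeOutputs` and the 0.5 threshold are assumptions made for this illustration and are not part of the original code.

```cpp
#include <cstddef>

// Round each network output to a bit at a 0.5 threshold (an assumption of
// this sketch) and assemble the bits most-significant first, which matches
// the bit order of the Target rows.
int decodeOutputs(const float* outputs, std::size_t count) {
    int value = 0;
    for (std::size_t i = 0; i < count; ++i) {
        int bit = outputs[i] >= 0.5f ? 1 : 0;
        value = (value << 1) | bit;
    }
    return value;
}
```

Applied to the outputs listed for training pattern 9 (0.99294, 0.00768, 0.00229, 0.99390), the bits round to 1 0 0 1, which is 9 in binary.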

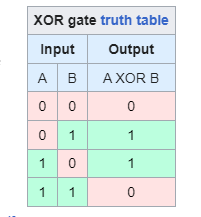

XOR Function:

The XOR truth table above is an example in which A and B are the input values (each 1 or 0) and A XOR B is the resulting output. To train the network on XOR, change the configuration to 4 training patterns, 2 input nodes, 3 hidden nodes and 1 output node:

const int PatternCount = 4;
const int InputNodes = 2;
const int HiddenNodes = 3;
const int OutputNodes = 1;

const byte Input[PatternCount][InputNodes] = {
  { 0, 0 },  // 0
  { 0, 1 },  // 1
  { 1, 0 },  // 2
  { 1, 1 }   // 3
};

const byte Target[PatternCount][OutputNodes] = {
  { 0 },
  { 1 },
  { 1 },
  { 0 }
};
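To see why XOR needs a hidden layer at all, it can help to look at a network that already solves it. The sketch below, in standard C++, uses hand-picked weights rather than trained ones, and only two hidden nodes instead of the three in the configuration. All names and weight values here are assumptions chosen for illustration; a trained network would arrive at different numbers.

```cpp
#include <cmath>

// Same logistic activation as the Arduino code.
float sigmoid(float x) {
    return 1.0f / (1.0f + std::exp(-x));
}

// Feed-forward pass of a 2-2-1 network with hand-picked weights.
// One hidden node behaves roughly like OR, the other like NAND, and the
// output node combines them like AND, which together gives XOR.
float xorNetwork(float a, float b) {
    float h1 = sigmoid(20.0f * a + 20.0f * b - 10.0f);    // approximately a OR b
    float h2 = sigmoid(-20.0f * a - 20.0f * b + 30.0f);   // approximately NOT (a AND b)
    return sigmoid(20.0f * h1 + 20.0f * h2 - 30.0f);      // approximately h1 AND h2
}
```

Calling `xorNetwork` with the four rows of the Input array gives a value below 0.5 for (0,0) and (1,1) and a value above 0.5 for (0,1) and (1,0). Training with backpropagation searches for weights that produce the same behaviour.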
